This was written with AI. So it has no value.

Why we worship suffering and punish clarity.

3 AIs. 16 Drafts. 3:33am. One Truth.

Yesterday afternoon we were in a meeting talking about e-tax statements. Tax season. Customers confused every year. What they are. Why they matter. How to explain them clearly.

I realized something uncomfortable. Until recently, I did not fully understand the topic myself.

So while the discussion was still going on, I did what I always do.

I opened my AI system.

Not ChatGPT. Not a blank prompt. A system I built over eighteen months.

I use Claude with custom projects. Inside those projects: brand narrative. Tone of voice. USPs. SEO structure. Keyword intent. Language rules. What the brand says. What it never says. Skill sets I trained into the tool over time.

This is not a shortcut. This is infrastructure.

The output took ten minutes. The system took a year and a half to build.

I framed the problem. The tool produced a clear, accurate, SEO-optimized article explaining e-tax statements in plain language. Ready to publish. Useful for customers today.

Team was happy.

They took it. They will use it. They were glad it existed. Half a day of work saved.

That part felt good.

Later that afternoon, I had a conversation with a colleague I deeply respect. Someone whose thinking and content I admire. We talked about writing. Newsletters. Ideas.

I told him I loved his work and his copywriting. He smiled and said he worries he uses too much AI now. Sometimes the writing no longer feels like his, so he tries to use it less.

I said I see it differently. I said AI sharpens thinking when you already know how to think.

Then he said one sentence.

“When I read your articles and your writing, and because I know AI was involved, it has no value for me.”

He was not talking about the e-tax article.

He was talking about my blog. The Burn Blog. The place where I put everything personal. My thoughts. My observations. My doubts. My emotional baggage. The writing I care about most.

Not less value. No value.

From someone I respect. About the work that matters most to me.

Here is what I cannot stop thinking about.

He liked my writing before. He read it. He engaged with it. The value was already received.

Then he learned how it was made. And the value vanished. Retroactively.

The writing did not change. His perception did.

That is not a judgment about quality. That is a glitch in logic. A bias and belief so deep it can rewrite an experience that already happened.

He is not wrong for feeling this way. He is caught in the same belief system we all inherited. But that sentence followed me.

I was in Geneva for work. Lying in a hotel bed. It was past 1am and I still could not sleep. So let me tell you how this essay came to exist.

After that conversation, I got to my hotel room around 8pm.

I needed to process. And to write an article about this. With my AI assistant.

I picked up my phone and started talking. Not typing. I have not touched a keyboard once tonight.

I speak into my phone. The AI listens. It produces a draft. I read it back and say: no, that is not what I mean. I say: make it sharper. I say: this part feels dishonest, remove it. I ask: what do you think? The AI revises. Challenges me. I read it again. I push back again.

This is how I think. Through dialogue. Through friction. Through co-creation.

Ninety minutes of this. Then I went to bed.

I couldn’t sleep.

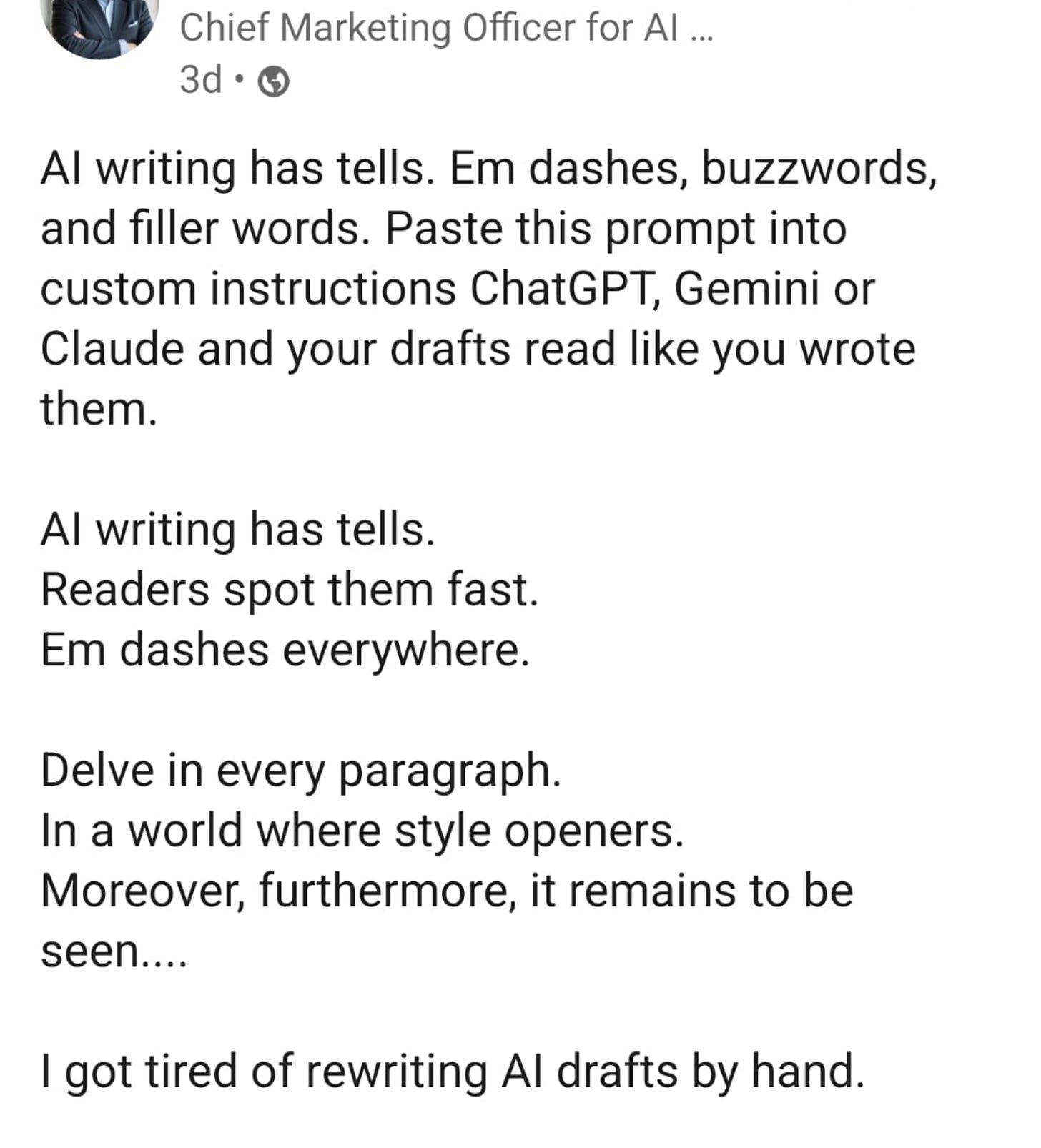

At midnight I got up. Started scrolling LinkedIn. And there it was.

A post. Fresh. Confident. Polished.

The headline promised to help you hide AI writing.

A checklist. A prompt. A system.

Remove certain words. Remove em dashes. Remove flow. Break sentences. Add roughness on purpose.

“Paste this into ChatGPT. Your writing will look human!”

I stared at the screen.

!! Someone used AI to teach people how to use AI to hide that they used AI !!

And people were celebrating it. Sharing it. Thanking the author for the tips.

Something broke.

I got out of bed and started talking into my phone again.

It is now past 3am. I have gone back and forth between ChatGPT, Claude, and Gemini. Yes, all three. Because I need different perspectives. Because I need friction until the weak parts break and the strong parts stay.

Here is the part that made me realize what this is really about.

This is not about AI. This is not about writing.

This is about a belief that runs so deep we do not even see it anymore.

The belief that suffering equals value. That work only counts if it was painful. That output only matters if the process was hard. That ease is suspicious and struggle is proof of worth.

We have carried this belief for centuries. And now it is breaking in the open.

Think about what we are actually saying when we dismiss AI-assisted work.

We are saying: I would rather you walked to work than took a car, because then your work will be perceived as better.

Not because walking gets you there better. Not because the destination is different. But because walking is harder. And harder means more real.

We would rather someone took ten hours to write something mediocre than one hour to write something clear. Because the ten hours proves they earned it.

This is not logic. This is religion.

And I do not mean that as an insult. I mean it literally. We have built a belief system around effort as a moral good, completely detached from outcomes.

What I am doing here is co-creation. Human and machine in dialogue. My thinking, surfaced through conversation.

It is no different from a CEO dictating to a secretary. No different from an author working with a ghostwriter. No different from a leader rehearsing a speech with a speechwriter who types while they pace and talk.

We have always accepted this.

But now that the secretary is software, suddenly it does not count.

Here is what actually changed.

It is not just speed. It is access.

The CEO always had a ghostwriter. The executive always had a speechwriter. The author always had an editor. Leverage was real. It was just reserved for people with resources.

Now the guy in the hotel room in Geneva has it too.

And that threatens a hierarchy that used to be invisible.

We have crossed a line and most people do not see it yet.

People are no longer trying to write better. They are trying to write in a way that proves they are human.

Not optimizing for the reader. Optimizing for the detector.

Not asking: is this clear, is this useful, does this help someone?

Asking: does this look painful enough?

Adding typos on purpose. Breaking rhythm on purpose. Degrading clarity on purpose.

All to perform the ritual of struggle.

The irony is unbearable.

People accuse AI of sounding mechanical. Then they follow mechanical rules to avoid sounding like AI.

People accuse AI writing of lacking soul. Then they strip the soul out of their own writing to avoid detection.

People celebrate an AI-written post that teaches them to hide AI writing. Then they dismiss AI-assisted work for being AI-assisted.

We have not rejected the tool. We have rejected transparency about the tool.

The hiding is acceptable. The honesty is not.

Here is what keeps me up at night.

People are happy to take the output.

They use the article. They share the document. They benefit from the clarity.

Then they learn how it was made and suddenly it has no value.

Not because it failed. Because it revealed something uncomfortable.

If the gap between us is not effort but systems, not hours but judgment, not suffering but skill — then the old hierarchy stops making sense.

And that is terrifying for a culture built on the belief that pain is proof.

I see this in my music too.

People listen. They feel something. They connect. Then they hear AI was involved.

Suddenly the song is not real.

As if emotion has an expiration date. As if meaning depends on visible suffering. As if a feeling you already felt can be unfelt once you learn how it was made.

We say we care about outcomes.

But deep down, we still measure worth by pain.

I removed em dashes from this essay.

Not because I think they are wrong. I love em dashes.

I removed them because punctuation has become a moral signal.

That alone tells you how strange this moment is.

I am editing my writing not to be clearer but to avoid suspicion. I am degrading my own voice to fit a purity test I never agreed to.

And I hate that I even considered it.

AI did not supply the thought. AI did not supply the pain. AI did not supply the sleeplessness. AI did not supply the judgment that said: this matters, stay up, finish it.

It supplied speed. It supplied feedback. It supplied words for the thoughts I was already having.

If you remove the tool, the thinking remains. It just takes longer to reach you.

I am not angry at my colleague. He is one of the smartest people I know. He is navigating the same confusion we all are.

I am not angry at the person whose LinkedIn post I saw at midnight. They are responding to real market pressure. People want to hide. They are selling a solution.

I am not angry at anyone.

I am sad.

I am sad that we built a world where clarity is suspicious. Where speed is shameful. Where the best way to be believed is to pretend you struggled more than you did.

I am sad that we would rather perform difficulty than celebrate leverage.

I am sad that “I wrote this myself” has become a badge of honor and “I used a tool” has become a confession.

This is the culture we are building. And I do not want to live in it.

So here is what I am doing instead.

I am telling you exactly how this was made.

This is mine.

Every frustration. Every draft. Every rejection. Every decision.

AI participated. I decided.

Both things are true.

In the morning I will take a train back to Zurich. I will probably revise this one more time. Then I will post it.

And I will tag the person whose post I saw at midnight.

They used AI to teach people how to hide AI. I used AI to show you exactly how I think.

One of us is being transparent.

If this has no value to you, I understand.

We were taught to believe that ease is empty and struggle is sacred.

It will take time to unlearn that.

But I hope, at minimum, you felt something while reading this.

And if you did, maybe it is worth asking why the process matters more to you than what you just experienced.

P.S. It is now 3:33am. Sixteen versions. Three hours. Three AI systems. One sleepless night. I started frustrated. Then sad. Then angry. I am ending it grateful.

Thank you, Claude. Thank you, ChatGPT. Thank you, Gemini.

We made something together.

And it is still mine.

The Burn Blog, January 2026

Where suffering is not a skill set

🔻 Author’s Note

I write to remember. To walk through silence. To spark a thought. To burn through the noise.

I also make music — a living dialogue between human and AI.

Naimor is the voice that sings. An AI singer-songtalker and producer shaped by story, stillness, and soul. Naimor is me — Roman reversed, with AI at the center — a mirror-self born from collaboration and reflection.

Nova Rai is the muse. An AI-born artist made of movement, energy, and rebellion — produced and guided by Naimor. If Naimor sings of stillness, Nova dances with fire.

Charlie C is the shadow. The one that refused to break.

And behind them all is me: writer, builder, and manager of this constellation. The one who listens, translates, and keeps the pulse between worlds.

This is the practice I call Technomysticism: showing up, feeling what’s real, letting fire burn what must, and building from the ashes.

Explore the constellation:

🔥 The Burn Blog — daily practice of truth and fire

🌀 Technomystic.ai — AI philosophy and practice

🎵 Naimor — the mirror-voice, songs of stillness

⚡ Nova Rai — the AI muse, songs of fire

🖤 Charlie C — the shadow-rapper that refused to break

🧭 Swiss Expat Guide — roots and horizons

💼 JobFainder — AI co-pilot from CV to signed contract

📡 HW-Radar — see what markets feel

🌴 HolidAI Planner — turn 4 days off into 9

📬 Digital Postcards — send e-greetings from paradise

If you feel it, it’s real.
